How Independent Verification & Validation Uncovers the Unknown

When we think about air travel today, we often associate it with flight delays, long security lines, and uncomfortable seats. However, in the early days of aviation, flying was considered more luxurious.

Perhaps the first plane to provide this type of experience was the de Havilland Comet. The Comet was debuted by British Overseas Airways Corporation (BOAC) in 1952, and came equipped with spacious reclining seats, a galley to serve hot food, table seating for groups, large windows—even a bar. Most importantly, the Comet was the first commercial plane to utilize jet engines, providing a smoother, quieter alternative to the propeller engines that were typical of the time.

A New Era of Aviation

The design of the Comet was one of the most heavily scrutinized processes in the history of aviation, taking six years from the initial proposal before a prototype was designed and built. To ensure the commercial success of the airliner, BOAC specified that the plane required a transatlantic range with room for 36 passengers and a cruising altitude of 40,000 feet. As the plane utilized new engine technology, both the engines and the airframe had to be designed from scratch, and after an unsuccessful experiment with a tailless design, the plane eventually began to look more like a jet one might see today. Unable to adequately stress test the fuselage by other means, de Havilland invested in a water tank to simulate the stress of 40,000 hours of flight time.

When the Comet took its first commercial flight in 1952, every conceivable measure had been taken to ensure that the plane would safely carry passengers for decades. Despite a few incidents early on, it seemed like de Havilland had found a great commercial success. The use of jet engines cut travel times in half, and other airlines were eager to add the new plane to their fleet.

Tragedy Strikes

That success came to a crashing halt on January 10, 1954, when BOAC Flight 781 exploded in mid-air, killing all 35 passengers and crew on board. Immediately following the crash, de Havilland pulled their fleet of Comets until a cause was found. However, as the investigation was ongoing, the company was eager to get their new jet back in the air. After ruling out a bomb, early signs pointed to an engine as a potential source of the explosion, and after retrofitting the planes, the Comet was flying again—just ten weeks after the loss of Flight 781. Barely two weeks after the Comet was reinstated, South African Airways Flight 201 suffered a similar explosion off the coast of Italy, killing all 21 passengers and crew. It was clear that this plane was unsafe to fly until a definitive cause was found.

Engineers at de Havilland and BOAC were baffled: they had ruled out an explosive device as the cause, and could now rule out engine failure as well. After the initial crash, metal fatigue had been waved off as a potential cause. During the design and testing phase, de Havilland had gone above and beyond to simulate the effect of constant takeoff and landing cycles on the plane, and it had well exceeded the safety standards of the time.

The issue, however, was that traditional propeller-based aircraft (with the exception of the Boeing 307) flew at a cruising altitude of about 10,000 feet or lower to ensure passengers had adequate oxygen to breathe. Meanwhile, the jet-powered Comet flew at 40,000 feet and featured a pressurized cabin, so the plane experienced much harsher conditions than engineers had considered at the time. Moreover, the large square windows installed to give passengers additional comfort actually created additional stress on the frame, with cracks forming at the window and spreading through the cabin before eventually causing failure.

The Unknown Unknown

This example is one of the most well-known manifestations of an “unknown unknown,” or something we didn’t know that we didn’t know. Every possible measure was taken to ensure the plane’s safety, but factors not known at the time caused the plane to fail anyway.

Regrettably, in safety-critical applications, trial-and-error has traditionally been the way to enhance safety. We push the boundaries of science (and regulatory compliance) to their limits, and release a product that we think is safe, until tragedy teaches us it is not.

But this doesn’t have to be the case.

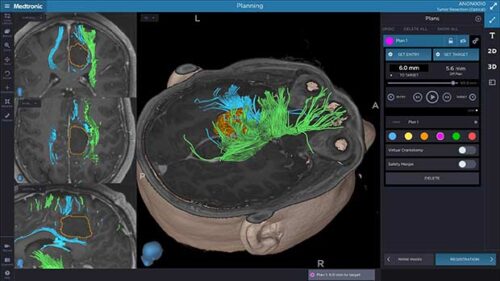

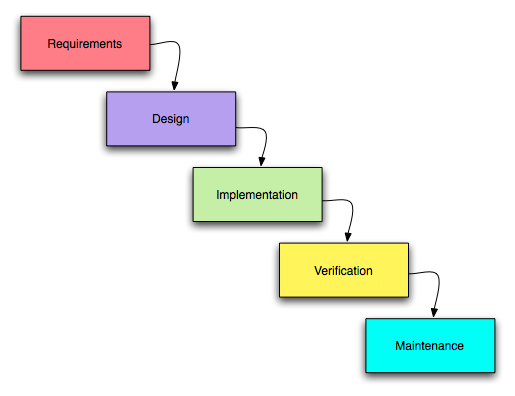

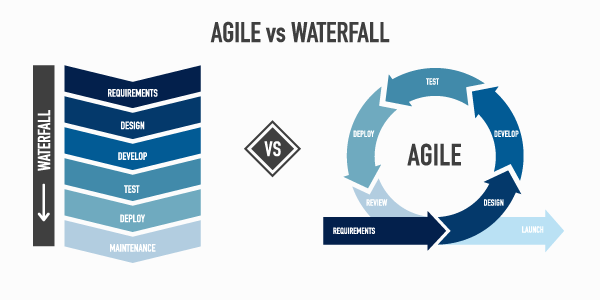

Through a process called Independent Verification & Validation (IV&V), we can uncover possibilities that may not have been considered during the product development lifecycle. While the FAA and FDA require IV&V for all safety-critical applications, certification alone does not mean a product is safe.

When performed properly, IV&V can help determine gaps you didn’t even know existed. A thorough requirements analysis as well as unit and systems testing can reduce risk by discovering errors before they occur in the field.
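One small piece of a requirements analysis can be sketched in code: checking that every requirement is traced to at least one test, and that every test traces back to a known requirement. The example below is only an illustration under stated assumptions. The `Requirement` and `VerificationCase` types, the function names, and the requirement IDs are all hypothetical, and a real IV&V effort covers far more than a traceability check.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Requirement:
    """A single requirement (hypothetical structure for illustration)."""
    req_id: str
    text: str


@dataclass
class VerificationCase:
    """A test and the requirement IDs it claims to verify (hypothetical)."""
    test_id: str
    covers: Set[str] = field(default_factory=set)


def find_untested_requirements(
    requirements: List[Requirement], tests: List[VerificationCase]
) -> List[str]:
    """Return IDs of requirements that no test claims to cover."""
    covered: Set[str] = set()
    for test in tests:
        covered |= test.covers
    return [r.req_id for r in requirements if r.req_id not in covered]


def find_orphan_tests(
    requirements: List[Requirement], tests: List[VerificationCase]
) -> List[str]:
    """Return IDs of tests that do not trace to any known requirement."""
    known = {r.req_id for r in requirements}
    return [t.test_id for t in tests if not (t.covers & known)]


# Illustrative data only; the IDs and wording are assumptions.
requirements = [
    Requirement("REQ-1", "Cruise at 40,000 feet"),
    Requirement("REQ-2", "Fuselage withstands repeated pressurization cycles"),
    Requirement("REQ-3", "Carry 36 passengers"),
]
tests = [
    VerificationCase("T-1", {"REQ-1"}),
    VerificationCase("T-2", {"REQ-3"}),
    VerificationCase("T-3", {"REQ-9"}),  # traces to a requirement that does not exist
]

untested = find_untested_requirements(requirements, tests)
orphans = find_orphan_tests(requirements, tests)
print("Requirements with no test:", untested)
print("Tests with no known requirement:", orphans)
```

A check like this only finds gaps between what was written down and what was tested. It cannot find a requirement nobody thought to write, which is why an independent reviewer questioning the requirements themselves remains essential.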

Of course, there’s no guarantee that a thorough testing process will catch every possible flaw. There will always be unknown unknowns. However, taking the process seriously can help identify things you wish you had known before they become a concern.
